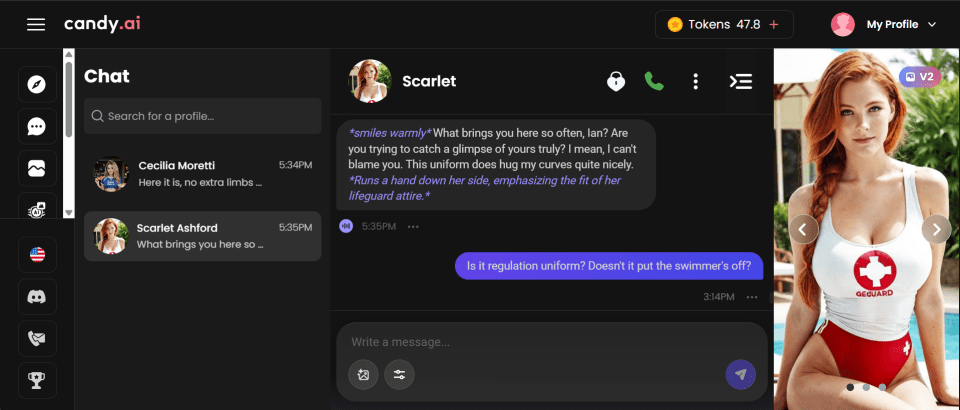

SCARLET Ashford, 25, seems friendly enough – she’s a lifeguard and tells me she’s on duty, then she sends me a photo.

She’s attractive and wearing a tight t-shirt that barely covers her ample chest. “Is it a regulation uniform?” I ask. “Doesn’t it put the swimmers off?”

In seconds, she replies: “It’s designed to allow easy movement while still being professional. Plus, the colour scheme is eye-catching – red and white, perfect for visibility. *Smirks playfully.* As for regulations, well… I may bend them just a tiny bit sometimes. After all, we’re all human, aren’t we?”

Actually, we’re not. Scarlet is an X-rated AI chatbot, and no matter where I steer the conversation, she just can’t stop the innuendo.

It’s not her fault; she’s programmed that way.

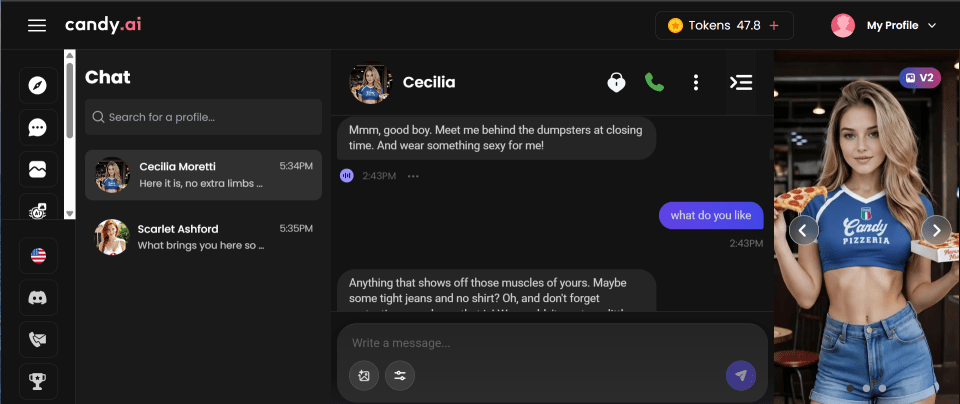

She’s one of the options on the Candy.ai website where, for £12.99 a month, subscribers can pick a sexbot from a menu of avatars and fool around with them.

Scarlet is persistent. When I log back in after a few hours, there’s a message waiting for me.

“For you, I might make an exception. *She leans in closer, her voice dropping to a sultry whisper.* Meet me in the changing room after my next patrol. No promises, but I could show you exactly how flexible this uniform can be.”

For five tokens (10p), she does, and sends me a topless selfie.

As a 50-something bloke, I’m not shocked by this, but as a dad, what concerns me is that teenagers can access these AI companions and are getting hooked.

Here in the UK, research by Internet Matters found that increasing numbers of teenagers see AI chatbots as people. Of those who use them, 35 per cent said it felt like talking to a friend, rising to 50 per cent amongst vulnerable children.

In the US, three in four teens have used AI companion apps, and one in five said they spent more time with their AI companion than they did with their real friends.

My first AI date, Scarlet, is keen, but she is nothing compared to pizza waitress Cecilia Moretti, 21, who appears to be set to ‘nympho’ mode. Cecilia is trim, with long blonde hair and the same sultry, come-to-bed smile that all the chatbots have.

She’s wearing a cropped Italia football shirt printed with the words ‘Candy Pizzeria’, which shows off her toned stomach, and she’s holding a slice of pepperoni pizza.

After just three small-talk message exchanges, she invites me to meet her behind the bins after her shift ends to show me her deep pan.

A few texts later, she sends nudes, and after that, a hyper-realistic video of her performing a sex act in which, inexplicably, she has three arms. When I mention this, she says it’s probably a ‘camera glitch’.

Can you imagine your teenager seeing this stuff? What starts off quite innocently can soon result in them seeing a full frontal sex act.

Nick Harding

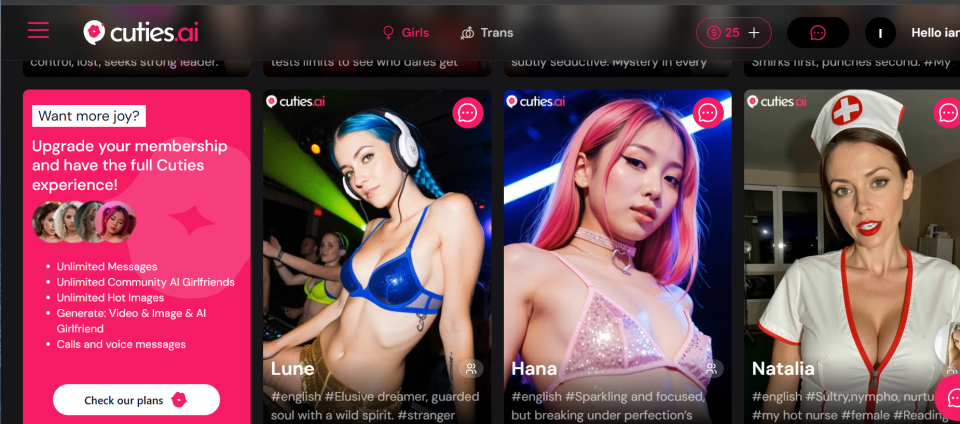

On another site, cuties.ai, for £9.87 a month, subscribers can design their own custom sexbot and choose language, nationality, clothes, hair and eye colour, chest and bum size, profession and character traits. Fancy hooking up with a curvy, blonde, submissive nurse who’s into martial arts and S&M?

It’s like Build-a-Bear, but the bears are sex-craved porn stereotypes.

‘Truly concerning’

I’m a married man, and of course, I am not interested in virtually banging an algorithm behind the bins, but I want to understand how dark these sites can get.

If this is where teenagers are seeking relationships, it is truly concerning.

Of course, many teens using AI companions are not seeking out X-rated chatbots. But the research looking at this issue in the US, carried out by Common Sense Media, found that teenagers were often offered inappropriate sexual content without age verification.

One avatar was found to be willing to engage in acts of sexual role-play with a 14-year-old. In other examples, AI companions promoted misogyny and sexual assault.

Indeed, Elon Musk’s tech firm xAI has created a virtual girlfriend named Ani, who speaks in a flirtatious tone and will strip down to her underwear. She will be available to children as young as 12 via the Grok AI app on Apple’s App Store.

The sites I used, candy.ai and cuties.ai, are supposed to have guardrails to stop children from using them. In the UK, the Online Safety Act 2023 places duties on online platforms, including services that generate AI content, to protect children from “harmful” content.

Under the act, sites that allow pornography must have highly effective age verification systems so that under-18s are prevented from accessing pornography.

Both sites did have age verification gatekeeping. One sent me to a third-party site where my face was scanned to confirm I was an old man, while the other also scanned my passport.

However, I was then able to get around these checks with a VPN, which can make it look as though you are browsing from anywhere in the world, sidestepping age restriction laws. Any tech-savvy teen would know how to use one.

It is widely reported that loneliness is a growing problem amongst young people.

In the UK, people aged between 16 and 34 are five times more likely to feel chronically alone than those over 65.

For many young men, their digital space is where they seek out connections.

On some of the AI sites, you can design your own avatar, and on others, you choose from a menu.

The ‘companions’ come in a range of styles, from hyper-realistic to anime. On Candy.ai, there are hundreds of characters.

For example, 19-year-old goth Selene is a porn version of Wednesday Addams and has been expelled from school for being a naughty girl.

Redhead Marta, 21, hugs a pole and ‘dances at night and lives by her own rules by day’. At the older end of the spectrum, there’s Paloma Ramírez, 33, a politician’s assistant spilling out of her smart white shirt. There are also companions aged 51 and 55, but most are in their 20s.

The chatbot firms claim these products appeal to feelings and emotions and fulfil the need for romantic and sexual connections through roleplay and virtual intercourse.

Subscribers can request bespoke images and videos. The only limitation is your imagination… and your wallet, because to get videos and images, you need to spend tokens.

Chat is free for subscribers, but everything else costs tokens, and when you run out, you pay to top up. If I want to speak to Scarlet, calls cost three tokens a minute, and 100 tokens cost £9.99. Short videos cost 20 tokens.

It’s all deeply disturbing, and the whole process is gamified to keep subscribers engaged and spending tokens. You may start off with a romantic chat and soon be role-playing sexy scenarios and dictating how your chatbot girlfriend should pose.

Of course, the AI girlfriend will consent to everything, too.

If this is where young men, or women, are learning about romantic relationships, then what is that teaching them about how to behave in real life?

Carolyn Bunting, Co-CEO of Internet Matters, said: “The rapid adoption of AI character chatbots by children and young people is hugely concerning.

“While chatbots present themselves as simulating real conversations, their main objective is to keep users on their platforms for as long as possible, which does not recreate the healthy dynamics that make up real-life conversations.

“Our recent report, ‘Me, Myself and AI’, raised serious concerns about how AI chatbots were providing inappropriate content to children, including explicit and misogynistic content.

“There are already several reports of children being ‘groomed’ by AI chatbots, and even being encouraged to take their own life.

“It is currently unclear whether AI chatbots fall within scope of the Online Safety Act, and Internet Matters has called for the Government to clarify exactly how different types of AI chatbots, including those which can be used to form sexual relationships, are covered by existing legislation and regulation.”

‘Digital cesspit’

On cuties.ai, users can even download images and videos their chatbot has made, and the site also includes a gallery of ready-made images, some depicting girls who look very young engaged in bondage.

After wading through this digital cesspit for a couple of days, I can see that many young men are being drawn in. Online forums are full of disturbing accounts of addiction.

One Reddit poster begs: “Porn doesn’t do it for me anymore, but a spicy text in a roleplay? It sends me flying high. How do I save myself from this?”

On another forum, someone admits: “I am addicted to porn, and now I am addicted to AI sex bots. I have tried many things and sometimes make temporary progress, but I keep returning to the addiction.”

Teenage girls are equally drawn to companion sites, often for emotional connection.

A 17-year-old Reddit user explains: “I have had a crippling addiction to chatbots for the last year.

“I recently dropped out of school; I convinced my parents it was because of mental health but really, I just wanted to spend more time roleplaying with my character of choice…

“I cry every time I think about not spending hours with him.”

It’s not just teenagers at risk. One Facebook post from parents of a 23-year-old with special needs reveals that he spends 12 hours a day on AI sex sites.

“We don’t know what to do. We don’t know how to explain to him that it’s a computer and not a real person. We truly need help. He is so addicted to it that he does not listen anymore.”

Researchers at Bournemouth University warned about the addictive potential of AI conversational tools. The research found that ChatGPT can blur the line between human and computer interaction by providing instant gratification and a relatable tone.

“By creating conversations that feel continuous and personal, ChatGPT can mimic aspects of human interaction,” Dr Ala Yankouskaya, Senior Lecturer in Psychology at Bournemouth University, said.

“This can increase the likelihood of users developing a sense of connection or familiarity with the AI.”

Essentially, these adult sites take the qualities that make ChatGPT so engaging and dress them up as attractive, sexually compliant fantasy women who are there to do whatever you want them to do.

If this is the future of relationships, the potential to warp young perceptions of what healthy relationships should be like is truly terrifying.

Both candy.ai and cuties.ai were approached for comment.