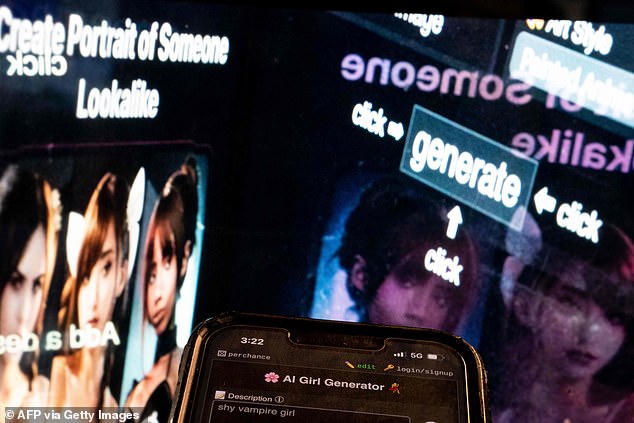

Experts have warned of ‘a massive explosion’ in boys – some as young as 13 – using free AI programs to create lifelike fake nude images of fellow pupils.

It is claimed that girls have even been driven to suicide after falling victim to the so-called ‘nudifying’ smartphone apps.

The apps can turn fully clothed photos of classmates – as well as teachers – into realistic-looking explicit nude images. They are thought to now be in use in ‘every classroom’ and teenage pupils have already been convicted of creating and sharing the images.

Under current law, creating, possessing and distributing an indecent image of a child are offences which carry substantial prison terms.

Marcus Johnstone, a criminal defence lawyer, said that there has been a ‘massive explosion’ in such crimes by children who are often unaware how serious their actions are.

‘I am aware of some perpetrators being 13 but they’re mostly 14 and 15,’ he said. ‘But they are getting younger.

‘Even kids at primary schools have knowledge of it and are looking at porn on their phones. It is happening in most, if not all, secondary schools and colleges.

‘I expect every classroom will have someone using technology to nudify photographs or create deepfake images.

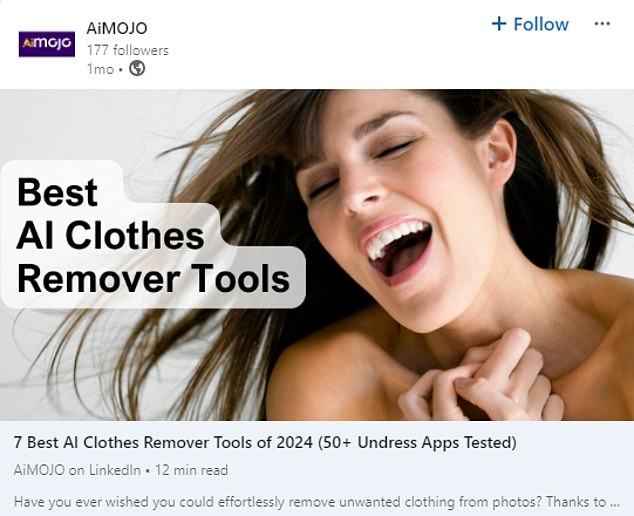

Posts on LinkedIn have even appeared promoting the ‘best’ nudifying AI tools available

‘It has a devastating effect on the girls – who are almost always the victims. It affects their mental health. We have heard stories of suicides.’

Children’s Commissioner Dame Rachel de Souza called on the Government to ‘go much further and faster’ to protect children, telling The Mail on Sunday that the apps ‘are seriously harmful and that their existence is a scandal’.

She added: ‘Nudifying apps should simply not be allowed to exist. It should not be possible for an app to generate a sexual image of a child, whether or not that was its designed intent.’

Among previous criminal cases, a Midlands boy was given a nine-month referral order for making 1,300 indecent images of a child, starting when he was 13.

And a 15-year-old in the South East was handed a nine-month referral order after making scores of indecent images, also beginning when he was 13.

The Crime and Policing Bill 2025 is expected to introduce a new offence of creating sexually explicit so-called ‘deepfake’ images or films.

Tech giant Meta is suing Hong Kong-based firm Joy Timeline amid claims it was behind nudifying apps including the free-to-download CrushAI.

The lawsuit followed reports that the firm had bought thousands of ads on Instagram and Facebook, using multiple fake profiles to evade Meta’s moderators.

Derek Ray-Hill of the Internet Watch Foundation said: ‘This is nude and sexual imagery of real children – often incredibly lifelike – which we see increasingly falling into the hands of online criminals with the very worst intentions.’

Latest statistics show there were about 1,400 proven sexual offences involving child defendants in England and Wales in the year to March 2024 – nearly a 50 per cent increase on the previous 12 months.