I am horrified that children are growing up in a world where anyone can take a photo of them and digitally remove their clothes.

They are growing up in a world where anyone can download the building blocks to develop an AI tool that can create naked photos of real people.

It will soon be illegal to use these building blocks in this way, but they will remain on sale from some of the biggest technology companies, meaning they are still open to misuse.

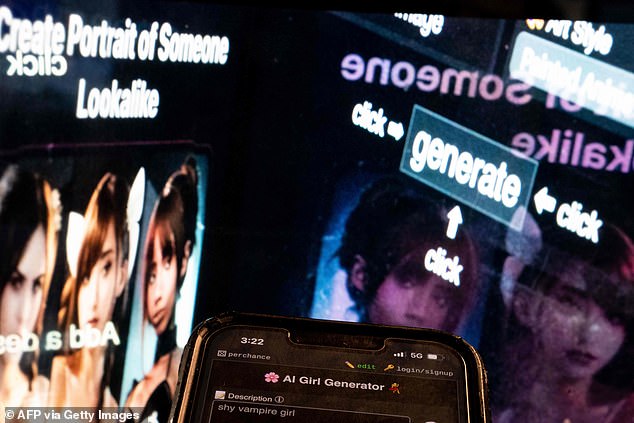

Earlier this year I published research into these apps, which use Generative Artificial Intelligence (GenAI) to create fake sexually explicit images from users' prompts.

The report exposed the shocking underworld of deepfakes: it highlighted that nearly all deepfakes in circulation are pornographic in nature, and 99% of them feature girls or women – often because the apps are specifically trained to work on female bodies.

In the past four years as Children’s Commissioner, I have heard from a million children about their lives, their aspirations and their worries.

Of all the worrying trends in online activity children have spoken to me about – from seeing hardcore porn on X to cosmetics and vapes being advertised to them through TikTok – the evolution of ‘nudifying’ apps into tools that aid the abuse and exploitation of children is perhaps the most mind-boggling.

As one 16-year-old girl asked me: ‘Do you know what the purpose of deepfake is? Because I don’t see any positives.’

Pictured: Dame Rachel de Souza, Children’s Commissioner for England

Experts have warned of ‘a massive explosion’ in boys – some as young as 13 – using free AI programs to create lifelike fake nude images of fellow pupils

‘Nudifying apps should simply not be allowed to exist,’ the Children’s Commissioner for England said

Children, especially girls, are growing up fearing that a smartphone could at any moment be used to manipulate them.

Girls tell me they’re taking steps to keep themselves safe online in the same way we have come to expect in real life, like not walking home alone at night.

For boys, the risks are different but equally harmful: studies have identified online communities of teenage boys sharing dangerous material as an emerging driver of radicalisation and extremism.

The government is rightly taking some welcome steps to limit the dangers of AI. Through its Crime and Policing Bill, it will become illegal to possess, create or distribute AI tools designed to create child sexual abuse material.

And the introduction of the Online Safety Act – and new regulations by Ofcom to protect children – marks a moment for optimism that real change is possible.

But what children have told me, from their own experiences, is that we must go much further and faster.

The way AI apps are developed is shrouded in secrecy. There is no oversight, no testing of whether they can be used for illegal purposes, no consideration of the inadvertent risks to younger users. That must change.

Nudifying apps should simply not be allowed to exist. It should not be possible for an app to generate a sexual image of a child, whether or not that was its designed intent.

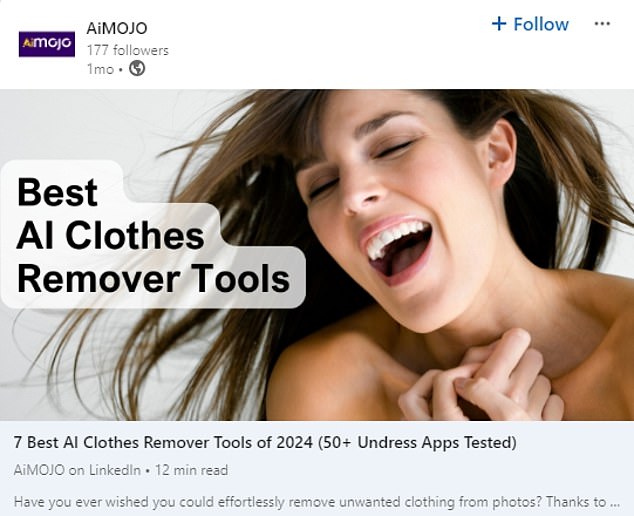

Posts on LinkedIn have even appeared promoting the ‘best’ nudifying AI tools available

The technology used by these tools to create sexually explicit images is complex. It is designed to distort reality, to fixate and fascinate the user – and it confronts children with concepts they cannot yet understand.

I should not have to tell the government to bring in protections for children to stop these building blocks from being arranged in this way.

I welcome the move to criminalise individuals for creating child sexual abuse image generators, but I urge the government to put the tools that allow predators to create sexually explicit deepfake images out of reach altogether.

To do this, I have asked the government to require technology companies that provide open-source AI models – the building blocks of AI tools – to test their products for their capacity to be used for illegal and harmful activity.

These are all things children have told me they want. They will help stop sexual imagery involving children becoming normalised.

And they will make a significant contribution to the government’s admirable mission to halve violence against women and girls, who are almost exclusively the subjects of these sexual deepfakes.

Harms to children online are not inevitable. We cannot shrug our shoulders in defeat and claim it’s impossible to remove the risks from evolving technology.

We cannot dismiss this growing online threat as a ‘classroom problem’ – because evidence from my survey of school and college leaders shows that the vast majority already restrict phone use: 90% of secondary schools and 99.8% of primary schools.

Rachel de Souza has said the Online Safety Act ‘marks a moment for optimism that real change is possible’

Yet despite those restrictions, the school leaders in that same survey, around 19,000 of them, told me online safety is among the most pressing issues facing children in their communities.

What worries them most is children’s access to screens in the hours outside school. Education is only part of the solution. The challenge begins at home.

We must not outsource parenting to our schools and teachers.

As parents, it can feel overwhelming to try to navigate the same technology as our children. How do we enforce boundaries on things that move too quickly for us to follow?

But that’s exactly what children have told me they want from their parents: limitations, rules and protection from falling down a rabbit hole of scrolling.

Two years ago, I brought together teenagers and young adults to ask, if they could turn back the clock, what advice they wished they had been given before owning a phone.

Those 16- to 21-year-olds agreed, without exception, that they had been given a phone too young.

They also told me they wished their parents had talked to them about the things they saw online – not just as a one-off, but regularly, openly and without stigma.

Later this year I’ll be repeating that piece of work to produce new guidance for parents – because they deserve to feel confident setting boundaries on phone use, even when it’s far outside their comfort zone.

I want them to feel empowered to make decisions for their own families, whether that’s not allowing their child to have an internet-enabled phone too young, enforcing screen-time limits while at home, or insisting on keeping phones downstairs and out of bedrooms overnight.

Parents also deserve to be confident that the companies behind the technology on our children’s screens are playing their part.

Just last month, new Ofcom regulations under the Online Safety Act came into force, meaning tech companies must now identify and tackle the risks to children on their platforms – or face consequences.

This is long overdue, because for too long tech developers have been allowed to turn a blind eye to the risks to young users on their platforms – even as children tell them what they are seeing.

If these regulations are to remain effective and fit for the future, they have to keep pace with emerging technology – nothing can be too hard to tackle.

The government has the opportunity to bring in AI product testing against illegal and harmful activity in the AI Bill, which I urge the government to introduce in the coming parliamentary session.

Such testing would rightly make technology companies responsible when their tools are used for illegal purposes.

We owe it to our children, and the generations of children to come, to stop these harms in their tracks.

Nudifying apps must never be accepted as just another restriction placed on our children’s freedom, or one more risk to their mental wellbeing.

They have no place in a society that values the safety and sanctity of childhood and family life.